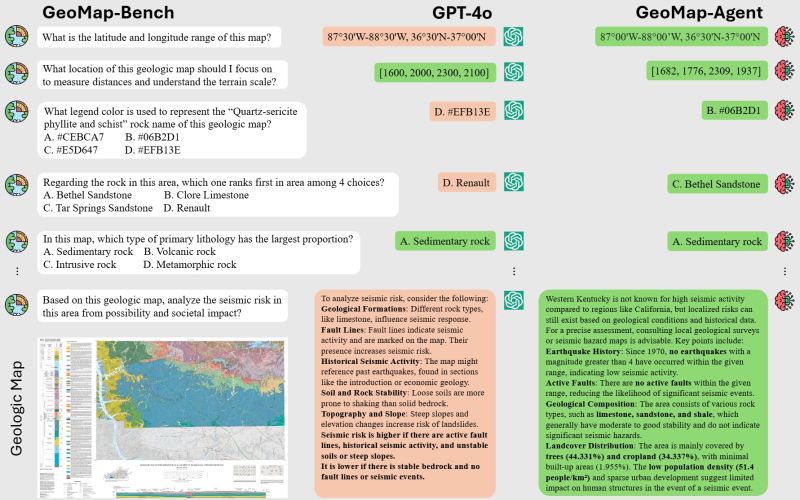

A benchmark dataset of over 3,000 question-image pairs for testing AI on geologic maps: Microsoft Research, the Chinese Academy of Geological Sciences, and Wuhan University have developed it to evaluate vision-language models (VLMs).

“Geologic maps are essential tools in earthquake prediction, mineral exploration, infrastructure planning, and environmental assessment. They depict layers of Earth’s crust—faults, rock formations, and tectonic boundaries—often in intricate visual detail. When cross-referenced with tectonic activity and data on the crust’s stability, these maps help scientists assess seismic risk and understand long-term geologic trends.

But interpreting these maps isn’t simple. They’re dense with symbols, annotations, and embedded knowledge that even experienced geologists must study carefully. And while AI has made strides in image analysis and text comprehension, it still struggles to handle the complex, multimodal nature of geologic data.

In many ways, geologic maps are languages in themselves. They’re rich in meaning, but both technical and domain-specific understanding are needed to read them well.”

Paper: Huang et al. (2025). Links to the benchmark dataset and the agent on GitHub and Hugging Face are in the comments. Source: https://www.microsoft.com/en-us/research/articles/peace-project-unlocks-ai-applications-in-geology-using-geomap/