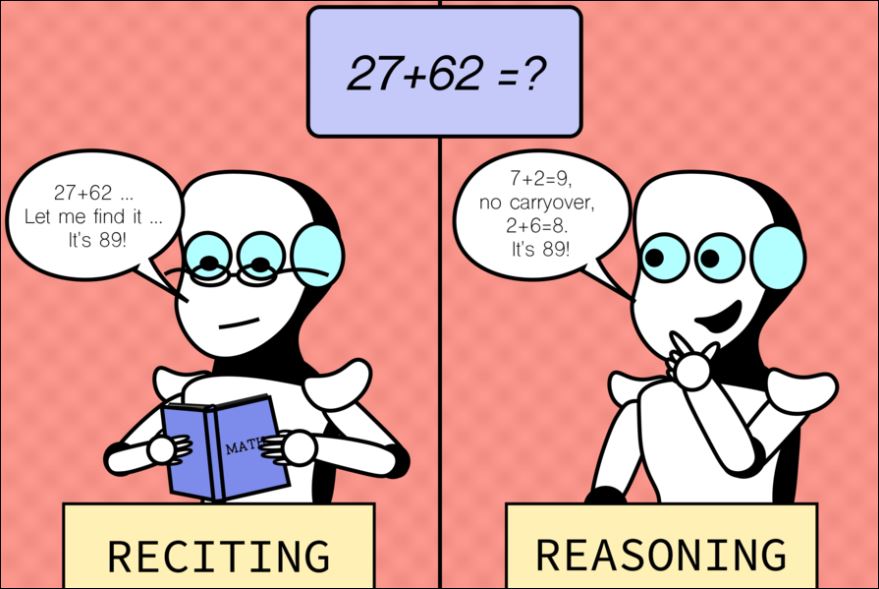

Reasoning skills of large language models are often overestimated: an interesting MIT study shows that LLMs often do well in familiar scenarios but not in novel ones, illustrating the challenge of moving from memorization to reasoning. After testing GPT-4, GPT-3.5, Claude and PaLM-2, the authors conclude:

“…it would also be interesting future work to see if more grounded LLMs (grounded in the “real” world, or some semantic representation, etc.) are more robust to task variations.”

Grounding in the real world could include using Retrieval-Augmented Generation (RAG), Knowledge Distillation, Fine-Tuning on authoritative domain or scientific papers, and Reinforcement Learning from Human Feedback (RLHF).

It may be an interesting area of geoscience research to define a set of geoscience questions, each paired with counterfactual variants, that are relevant (genuinely useful) for a specific set of use cases. A generic language model could then be benchmarked against various geoscience domain language models, to test how far novel reasoning is improved. This could help deepen understanding and stimulate creativity within certain geoscience use cases.
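As a rough illustration of what such a benchmark could look like, here is a minimal Python sketch. It scores each model on "default" and "counterfactual" versions of a question and reports the gap between the two. Everything in it is an assumption made for illustration: the `Item` structure, the `benchmark` function, the two toy stratigraphy questions and the two stub "models" are all hypothetical, and exact-match scoring stands in for whatever evaluation a real study would use. It is not the method used by Wu et al.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Item:
    """One benchmark question (hypothetical structure, for illustration)."""
    question: str
    answer: str
    condition: str  # "default" or "counterfactual"


# A "model" here is just any function from a prompt to an answer string.
Model = Callable[[str], str]


def accuracy(model: Model, items: List[Item], condition: str) -> float:
    """Exact-match accuracy of `model` on the items with the given condition."""
    subset = [it for it in items if it.condition == condition]
    if not subset:
        raise ValueError(f"no items with condition {condition!r}")
    correct = sum(
        model(it.question).strip().lower() == it.answer.strip().lower()
        for it in subset
    )
    return correct / len(subset)


def benchmark(models: Dict[str, Model], items: List[Item]) -> Dict[str, Dict[str, float]]:
    """Score every model on both conditions and report the drop between them."""
    results = {}
    for name, model in models.items():
        default = accuracy(model, items, "default")
        counterfactual = accuracy(model, items, "counterfactual")
        results[name] = {
            "default": default,
            "counterfactual": counterfactual,
            "gap": default - counterfactual,
        }
    return results


# Toy items invented for this sketch; a real benchmark would need many
# expert-reviewed questions per use case.
ITEMS = [
    Item(
        "In an undisturbed sedimentary sequence, which layer is older: bottom or top?",
        "bottom",
        "default",
    ),
    Item(
        "The sequence has been completely overturned by folding. "
        "Which layer is now older: bottom or top?",
        "top",
        "counterfactual",
    ),
]


def memorizing_stub(prompt: str) -> str:
    """Stub standing in for a model that recites the familiar answer."""
    return "bottom"


def reasoning_stub(prompt: str) -> str:
    """Stub standing in for a model that accounts for the changed premise."""
    return "top" if "overturned" in prompt else "bottom"


RESULTS = benchmark(
    {"generic (stub)": memorizing_stub, "domain (stub)": reasoning_stub},
    ITEMS,
)

if __name__ == "__main__":
    for name, scores in RESULTS.items():
        print(name, scores)
```

In practice the stubs would be replaced by calls to the generic and geoscience-tuned models being compared, and the "gap" column is the number of interest: a smaller drop from default to counterfactual would suggest the model is relying less on memorized patterns.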

Wu et al. (2024) MIT paper here: https://arxiv.org/pdf/2307.02477

Image: Alex Shipps/MIT CSAIL (https://news.mit.edu/2024/reasoning-skills-large-language-models-often-overestimated-0711)
