To understand domain terminology effectively in areas like healthcare and geoscience, domain training has been shown to improve results. https://www.nature.com/articles/s41586-023-06291-2

BERT-E

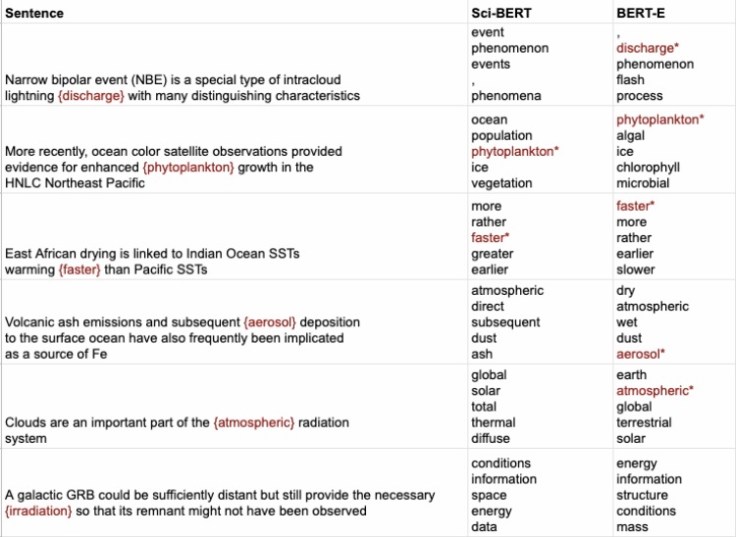

The NASA IMPACT team published a paper at AGU back in 2021 on BERT-E an Earth Science trained language model (270k articles) comparing to Sci-BERT (see screenshot). https://agu2021fallmeeting-agu.ipostersessions.com/default.aspx?s=9D-AC-B5-BA-E8-8D-CE-44-5F-17-8E-3F-B5-16-0E-60

The model may be superseded by the likes of GPT-4 in 2023, but the Masked Language Model (MLM) is published for use in Huggingface and BERT’s embeddings may prove useful. https://huggingface.co/nasa-impact/bert-e-base-mlm

GeoBERT

In 2021 ExxonMobil created their own GeoBERT using 20 million of their own documents for question answering and summarisation. https://onepetro.org/SPEADIP/proceedings-abstract/21ADIP/3-21ADIP/474196

This is not to be confused with Geo-BERT in Huggingface https://huggingface.co/k4tel/geo-bert-multilingual Liu (2021) which detects geographical (not geological) information.

GilBERT

In 2019 Schlumberger trained a BERT model GilBERT (Geologically informed language modelling) using Norwegian oil & gas well reports, Textbooks and other non specified open access reports. Although they don’t mention the number of reports, they do state 4 Million words. This sounds a lot but it is not.Equating to probably just 1,000 reports!!

Their fine tuning transfer learning work was not so successful so they created a BERT model just from geological information. https://openreview.net/pdf?id=SJgazaq5Ir

Combined with some anecdotal information I have observed in practice within multinational companies, it leads me to the following assertion.

The volume and diversity (representativeness) of the training data is paramount. Poor results in language models and text embeddings (word vectors) are often due to using far too small training sets and/or which are not representative for the use case in mind.

Leave a comment