Language Models, Search, Vectors and Petroleum Systems: I’ve conducted some research on how well sentence transformers and other approaches can match a user query to sentences, in order to answer questions and summarise. It continues from other posts I’ve made recently.

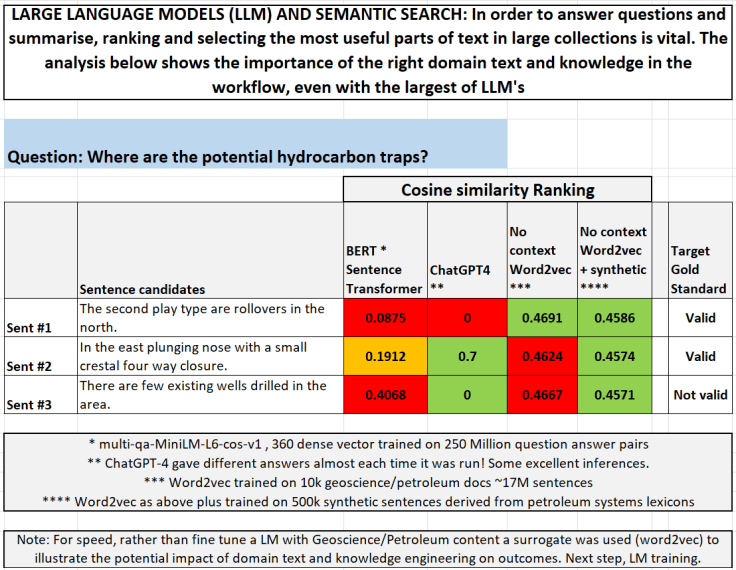

The question posed was “Where are the potential hydrocarbon traps?”, and 3 actual candidate sentences were tested against it.

1. The second play types are rollovers in the north.

2. In the east this plunging nose with a small crestal four way closure.

3. There are few existing wells drilled in the area.

Those with the highest cosine similarity (closer to 1) are deemed good candidates to contain the answer or part of the answer. As I’m using small datasets, the focus was on the relative ranking of the 3 sentences to each other, rather than their absolute similarity. If, for example, sentence 1 or 2 were not relatively highly ranked compared to sentence 3, then in a corpus of many sentences they would almost certainly fail to be deemed relevant to the question.
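As a minimal sketch of the ranking step described above: compute the cosine similarity between the question vector and each candidate sentence vector, then sort. The function names and the toy 3-dimensional vectors are made up purely for illustration; they are not real embeddings and not the values from these experiments.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as having no similarity
    return dot / (norm_a * norm_b)


def rank_candidates(query_vec, candidate_vecs):
    """Return (sentence_number, score) pairs, highest similarity first."""
    scores = [
        (number, cosine_similarity(query_vec, vec))
        for number, vec in enumerate(candidate_vecs, start=1)
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy vectors (assumption: illustrative only, not model output).
query = [1.0, 0.0, 1.0]
candidates = [
    [1.0, 0.0, 1.0],  # stands in for sentence 1
    [1.0, 1.0, 0.0],  # stands in for sentence 2
    [0.0, 1.0, 0.0],  # stands in for sentence 3
]

ranking = rank_candidates(query, candidates)
for number, score in ranking:
    print(f"sentence {number}: {score:.3f}")
```

What matters for the test here is the order of the list, not the absolute scores: the off-topic sentence should land at the bottom.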

An open-source BERT language model, trained on 250 million question-answer pairs and reported to do a pretty good job at semantic search in the Hugging Face evaluations, failed to identify sentences 1 and 2 as the best candidates. ChatGPT4 failed to identify sentence 1 as being relevant but was correct with sentence 2.

For speed, rather than transfer learn an LLM on geoscience/petroleum content, I used a surrogate to test the concept.

A simple, shallow neural network approach without context, using bigrams (word2vec) but trained on 10,000 public geoscience/petroleum documents, identified sentence 1 correctly but not sentence 2 (compared to sentence 3).
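One common way to get a sentence vector out of a context-free model like word2vec is to merge known bigrams into single tokens and then average the word vectors. The sketch below assumes that approach; the post does not state how sentence vectors were built, and the bigram list, the 2-dimensional vectors and the function names are all hypothetical.

```python
def merge_bigrams(tokens, known_bigrams):
    """Join adjacent tokens that form a known bigram, e.g. 'hydrocarbon_traps'."""
    merged = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) in known_bigrams:
            merged.append(tokens[i] + "_" + tokens[i + 1])
            i += 2  # skip both halves of the bigram
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def sentence_vector(tokens, word_vectors):
    """Mean of the vectors of the tokens found in the vocabulary (None if none found)."""
    found = [word_vectors[t] for t in tokens if t in word_vectors]
    if not found:
        return None
    dim = len(found[0])
    return [sum(vec[k] for vec in found) / len(found) for k in range(dim)]


# Hypothetical bigram list and toy vectors (assumption: illustration only).
known_bigrams = {("hydrocarbon", "traps")}
word_vectors = {
    "potential": [1.0, 0.0],
    "hydrocarbon_traps": [0.0, 1.0],
}

tokens = merge_bigrams(["potential", "hydrocarbon", "traps"], known_bigrams)
vector = sentence_vector(tokens, word_vectors)
print(tokens, vector)
```

Because every word has a single fixed vector, whatever the model knows about a domain term has to come from the training corpus, which is why the choice of training text matters so much here.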

The same approach as above was then taken, with additional training on 500,000 synthetic sentences created from a petroleum systems lexicon. This achieved the best result, correctly identifying the relative ranking of the sentence candidates.

This evidence supports the importance of domain text and knowledge in the semantic search workflow. Next step – train a domain language model!

#generativeai #petroleum #oilandgas #largelanguagemodels #chatgpt #ccus #naturallanguageprocessing #digitaltransformation #artificialintelligence #unstructureddata #semanticsearch